Welcome to Partha’s Homepage!

I am an Applied Scientist at Coalition Inc., where I focus on building agentic platforms that leverage internal knowledge and collaborative intelligence for enterprise applications. My work centers on designing enterprise-scale knowledge platforms and LLM-driven solutions that integrate reasoning, automation, and data understanding to enhance decision-making and customer intelligence.

Previously, I was a Deep Learning Researcher at Huawei Canada, specializing in Vision-Language models within the AI-Generated Content Group. My work focused on building accurate and generalizable Multi-Modal Language Models (MLLMs) that seamlessly integrate visual and textual understanding for real-world applications.

Before this, I completed my Ph.D. at the University of Waterloo, where I developed machine learning techniques to detect software bugs directly from source code. Using a combination of Reinforcement Learning and language modeling, I created AI-driven solutions to identify bugs and vulnerabilities with high precision.

I also worked as an Applied Scientist II Intern at Amazon Ads, focusing on ranking models and ad targeting. This involved optimizing models to enhance ad relevance and user engagement.

Research Interests

I am broadly interested in building applications using large language models (LLMs), multi-modal large language models (MLLMs), and vision-language models (VLMs), particularly in:

- Search & Ranking Systems – Developing learning-to-rank models and optimizing multi-modal deep learning models for applications like search, recommendation, and ad personalization.

- Vision-Language & Multi-Modal AI – Enhancing model performance for image-text understanding, instruction generation, and multi-modal reasoning using VLMs and MLLMs.

- Multi-Agent Collaboration Systems – Building enterprise-scale, data-driven agentic systems that integrate internal knowledge bases, automate reasoning across heterogeneous data, and enable collaborative AI workflows for operational efficiency and customer intelligence.

- Software Engineering & Bug Localization – Applying LLMs and reinforcement learning for bug localization, software vulnerability detection, and ranking of source code files.

For more details about my work, please explore my projects and related publications.

Work Experience

Applied Scientist

May, 2026 - Present

Advancing Agentic Knowledge Platforms for Customer Success

Built an enterprise knowledge platform with automated ingestion, intelligent tagging, and agentic enrichment to power a Customer 360 system and chatbot for improved support efficiency. Achieved a 30% reduction in human agent workload, resolution times two days faster, and 23% higher customer satisfaction.

Deep Learning Researcher

December, 2024 - April, 2026

Enhancing AI-driven content generation with vision-language models

Developed and optimized vision-language models for AI-driven content generation, improving image aesthetics, user engagement, and system efficiency. Achieved state-of-the-art results in aesthetic assessment, instruction clarity, and model serving performance through advanced fine-tuning and optimization techniques.

Applied Scientist II Intern

August, 2023 - December, 2023

Trio: Enhancing model performance through co-purchased product integration

Built a deep learning model to curate advertisements tailored to customers, enhancing the alignment between ads and customers by 3% across 21 Amazon marketplaces. Implemented five novel features and employed multi-objective training techniques to optimize the model's overall performance.

Software Developer

October, 2018 - December, 2020

Enhancing search ranking and personalization for higher engagement and revenue impact

Developed an intelligent search system that personalizes address and point of interest (POI) retrieval by leveraging user attributes, history, and preferences, improving engagement with a 21.4% increase in CTR and a 5% reduction in search abandonment. Enhanced monetization by integrating intent-based advertisements, driving $35K in revenue within two months. To support scalability, built a microservices-based streaming platform serving 15K IoT devices and implemented a distributed data pipeline for efficient data validation and sanitization, reducing manual effort by 30%.

Projects

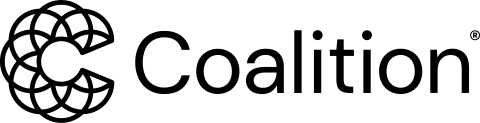

RLocator: Reinforcement Learning for Bug Localization

A reinforcement learning agent designed to localize bugs by learning from developer feedback and analyzing bug reports.

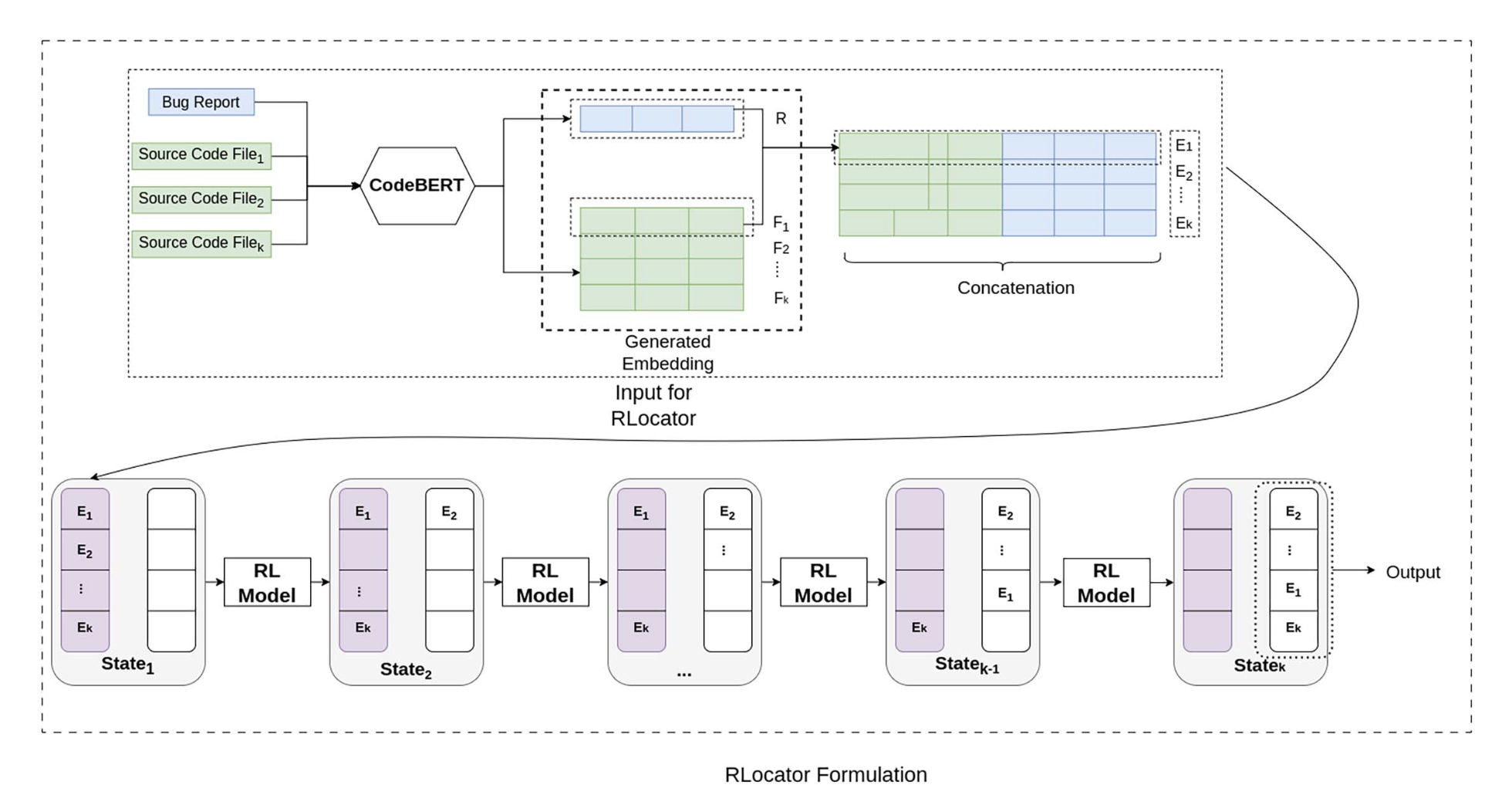

BLAZE: Cross-Language and Cross-Project Bug Localization via Dynamic Chunking and Hard Example Learning

BLAZE is a hybrid retrieval system that integrates syntactic and semantic search, powered by an embedding model trained with in-batch hard example mining. It outperforms OpenAI's third-generation embedding model by up to 38% in bug localization tasks.

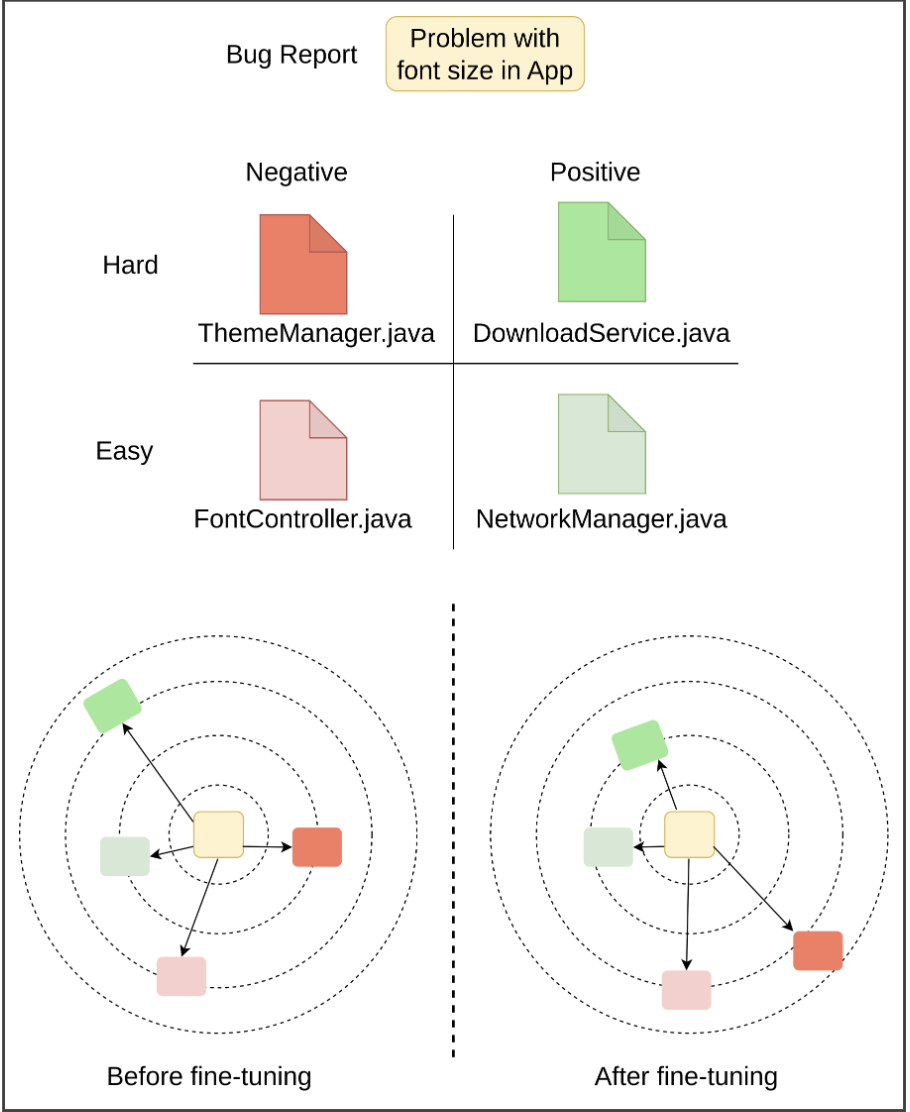

Revisiting the Performance of Deep Learning-Based Vulnerability Detection on Realistic Datasets

We demonstrated that the performance of existing vulnerability detection models lacks generalizability due to a bias in the dataset curation pipeline. To address this issue, we proposed a new dataset curation technique and evaluated six different models, including CodeLlama and Mixtral. Our results show that models trained on the newly curated dataset exhibit a 30% improvement in generalization performance.

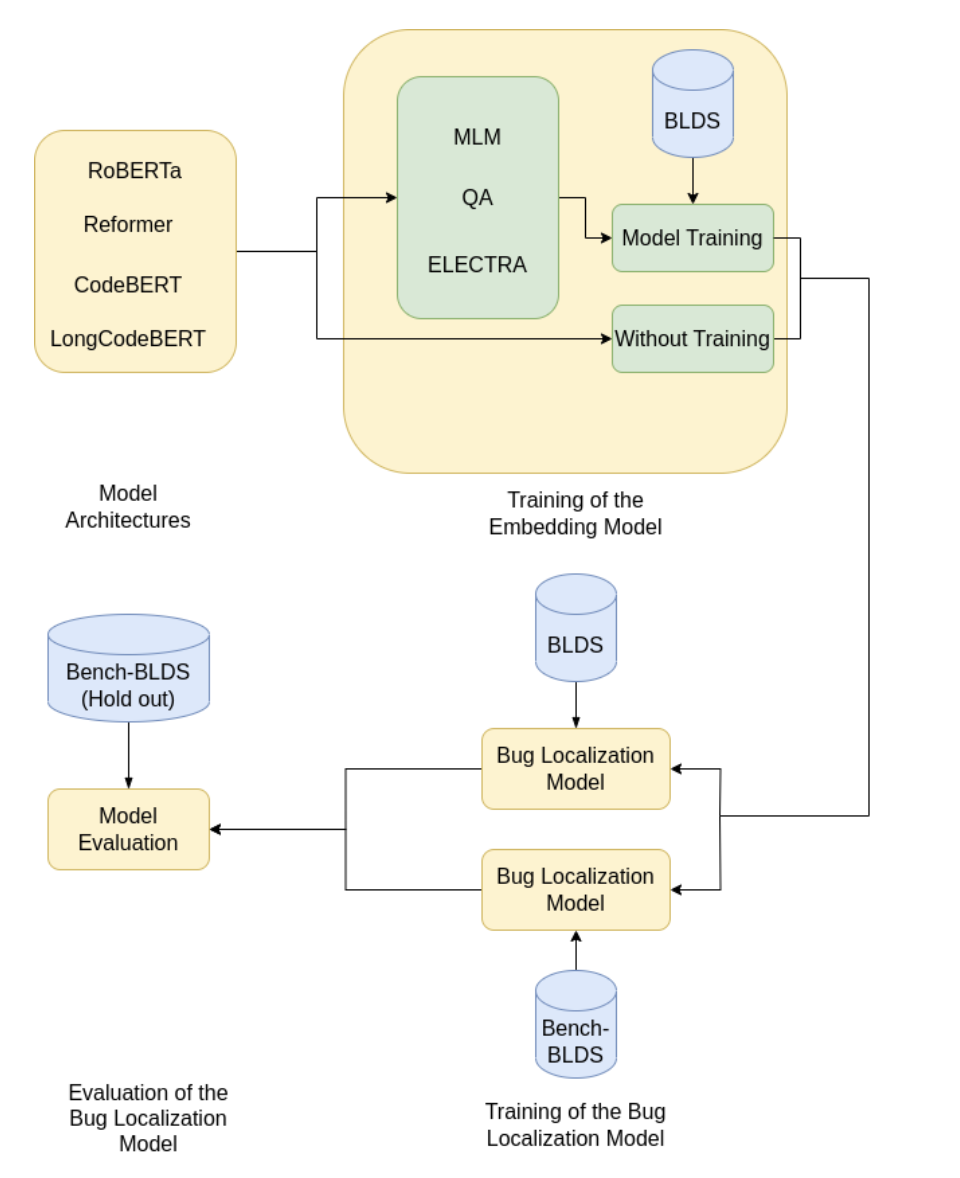

Aligning Programming Language and Natural Language: Exploring Design Choices in Multi-Modal Transformer-Based Embedding for Bug Localization

We evaluated 32 different embedding-based models to determine the most effective training technique for developing embedding models for source code. Our analysis revealed that adversarial techniques produce more robust and generalizable models.

Publications

BLAZE: Cross-Language and Cross-Project Bug Localization via Dynamic Chunking and Hard Example Learning Partha Chakraborty,

IEEE Transactions on Software Engineering (TSE), 2025.

RLocator: Reinforcement Learning for Bug Localization Partha Chakraborty,

IEEE Transactions on Software Engineering (TSE), 2024.

Revisiting the Performance of Deep Learning-Based Vulnerability Detection on Realistic Datasets Partha Chakraborty,

IEEE Transactions on Software Engineering (TSE), 2024.

Aligning Programming Language and Natural Language: Exploring Design Choices in Multi-Modal Transformer-Based Embedding for Bug Localization Partha Chakraborty,

Third ACM/IEEE International Workshop on NL-based Software Engineering (NLBSE), 2024.

A Survey-Based Qualitative Study to Characterize Expectations of Software Developers from Five Stakeholders Partha Chakraborty,

Proceedings of the 15th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), 2021.

How do developers discuss and support new programming languages in technical Q&A site? An empirical study of Go, Swift, and Rust in Stack Overflow Partha Chakraborty,

Information and Software Technology (IST), 2021.

Understanding the motivations, challenges and needs of blockchain software developers: A survey Partha Chakraborty

Empirical Software Engineering (EMSE), 2019.

Empirical Analysis of the Growth and Challenges of New Programming Languages Partha Chakraborty,

IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), 2019.

Understanding the software development practices of blockchain projects: a survey Partha Chakraborty,

Proceedings of the 12th ACM/IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), 2018.

Education

University of Waterloo

Ph.D. in Computer Science, January 2021 - November 2024

- Specialization: Artificial Intelligence

- Advisor: Professor Meiyappan Nagappan

- Dissertation: Optimizing Automated Bug Localization for Practical Use

Bangladesh University of Engineering and Technology

B.Sc. in Computer Science and Engineering, Jul 2014 - Oct 2018

- Major: Software Engineering

- Advisor: Rifat Shahriyar

- Thesis: An Empirical Study on the Growth of New Languages in Stack Overflow

Services

- Program Committee Member: Mining Software Repositories (MSR) 2025, Data and Tool Showcase Track

- Reviewer:

  - IEEE Transactions on Software Engineering (TSE) - 2022, 2023, 2024

  - Empirical Software Engineering (EMSE) - 2024

  - ACM Transactions on Software Engineering and Methodology (TOSEM) - 2024

- Teaching:

  - TA for CSE 446 - Software Design and Architecture

  - TA for CSE 348 - Introduction to Database Systems

  - TA for CSE 230 - Introduction to Computers and Computer Systems

- Mentored one University of Waterloo CS undergraduate student on a research project.

Skills

- Languages: Java, Python, C++, C

- Frameworks: PyTorch, TensorFlow, Android, Django, ONNX

- Databases: MySQL, PostgreSQL, Oracle, SQLite, Pinecone, Elasticsearch, Milvus

- MLOps Tools: Weights & Biases, MLflow

- Tools: Android Studio, Google Colab Platform, Apache Spark, Ollama, Slurm

- Cloud: AWS, Google BigQuery, SageMaker

- Others: LaTeX, Linux, Shell Script, Git